Introduction

I recently completed the final technical project for my master's degree, the aim of which was to build a system that could generate convincing reverberation at runtime. The idea came from a discussion I had with a developer about procedurally generated levels in 3D games. We discussed the difficulty of using traditional methods such as reverb volumes when the level geometry is unknown until runtime, and it occurred to me that ray casting could be used to approximate room dimensions and calculate early reflections. This approach is not a new idea by any means, with Google Resonance, Steam Audio and many others offering similar functionality. I wanted to see if I could create such a system entirely within Unreal Engine 4, without any reliance on third-party plug-ins or software. It was also imperative that the system be parametrisable, as sound design in interactive games is often so much more than simulating real life. I wanted a sound designer using the system to be able to over- or under-emphasise reverberant characteristics to suit the needs of their project. Here’s what I came up with.

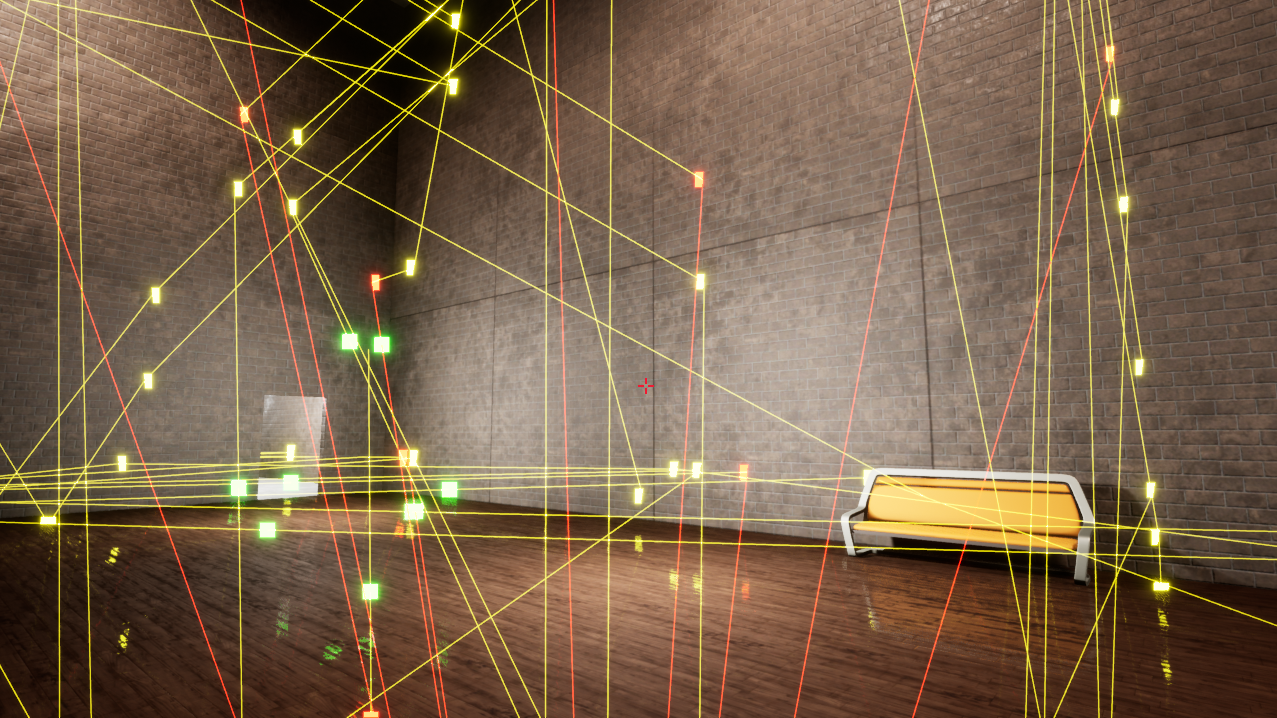

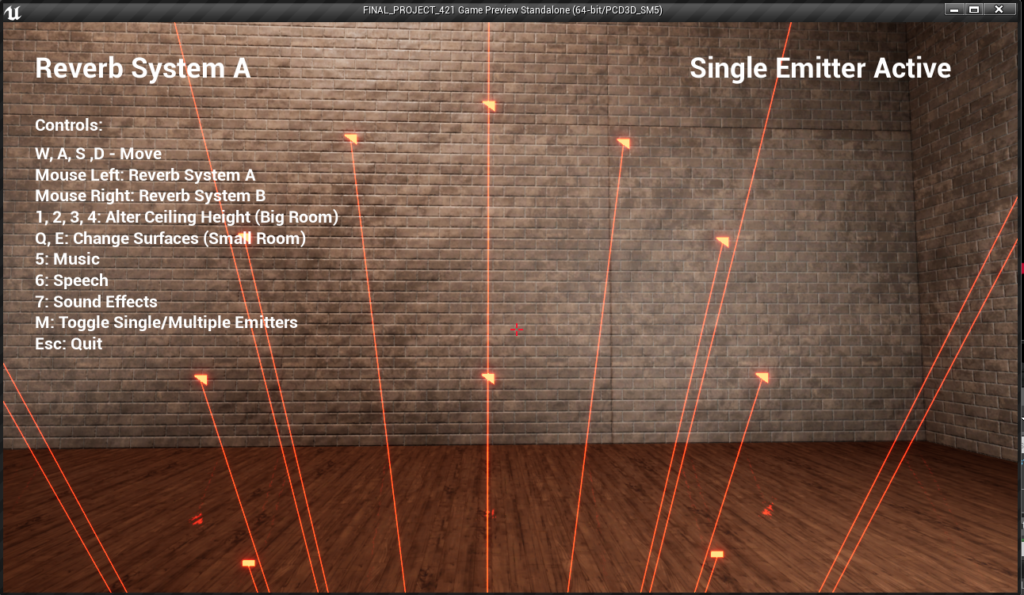

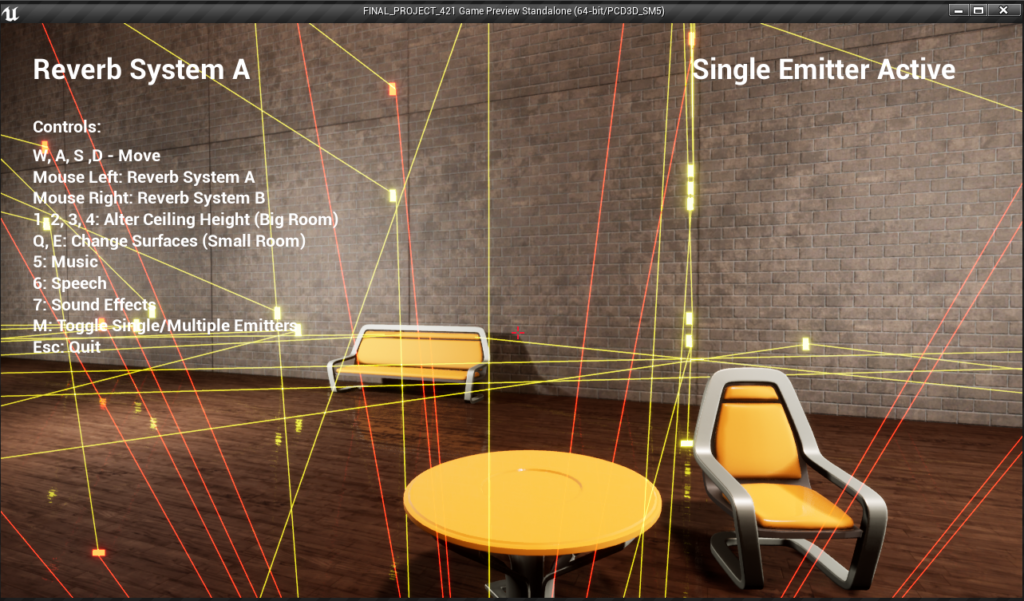

Demonstration

The video above shows the system at work in a test level I created. It shows the way in which the reverb system responds dynamically to changes in room dimensions and even construction materials. Now we’ll take a look under the hood to see how it works.

Overview

The system can be broadly split into two parts: the early reflection system, which models the first few discrete echoes the player hears, and the late reflection system, which models the tail of the reverb. The new audio engine in Unreal Engine 4 allows both of these components to be modelled using real-time audio effects. Multi-tap delays are used for early reflections, and the reverb effect is used for late reflections. The parameters for these effects are set using a series of ray casts (or line traces, to use Unreal Engine terminology) that ascertain the volume of the space, its construction materials and the paths of reflection within it. The level of late reflections also increases as the player gets further from the emitter, for added realism.
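The distance-based scaling of late reflections can be pictured as a clamped linear map from emitter distance to reverb send level. The sketch below is only an illustration: the function name, the distance range and the send levels are placeholder assumptions, not values taken from the system.

```python
def late_reverb_send(distance_m, near_m=1.0, far_m=30.0,
                     min_send=0.2, max_send=1.0):
    """Map listener-to-emitter distance to a late reverb send level.

    All default values are hypothetical placeholders for illustration.
    """
    # Normalise the distance into the 0..1 range and clamp it.
    t = (distance_m - near_m) / (far_m - near_m)
    t = max(0.0, min(1.0, t))
    # Linear interpolation between the minimum and maximum send levels.
    return min_send + t * (max_send - min_send)
```

With these placeholder values, an emitter at or closer than 1 m gets the minimum send, and anything at 30 m or beyond gets the maximum.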

Late Reflections

The first batch of line traces is emitted in 360 degrees around the player. These traces are used to estimate the volume of the space and to ascertain the material types of the walls, floor and ceiling. When a trace collides with the level geometry, the physical surface material type is stored, along with data such as the impact point and impact normal.
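One possible way to turn trace hit distances into a volume estimate is shown below. It is a minimal sketch under stated assumptions rather than the method the system uses: it assumes a horizontal ring of evenly spaced traces plus one trace straight up and one straight down, with distances in metres, and the function name is hypothetical.

```python
import math

def estimate_volume(ring_distances, up_distance, down_distance):
    """Estimate room volume from trace hit distances (metres).

    ring_distances: hit distances of horizontal traces spaced evenly
    over 360 degrees, listed in order of increasing angle.
    """
    n = len(ring_distances)
    if n < 3:
        raise ValueError("need at least three horizontal traces")
    step = 2.0 * math.pi / n
    # Floor area: sum the triangles formed by the listener and each
    # pair of adjacent hit points (area = 1/2 * a * b * sin(angle)).
    area = 0.0
    for i in range(n):
        a = ring_distances[i]
        b = ring_distances[(i + 1) % n]
        area += 0.5 * a * b * math.sin(step)
    # Height: distance to the ceiling plus distance to the floor.
    height = up_distance + down_distance
    return area * height
```

For a listener in the centre of a 4 m by 4 m room with a 3 m ceiling, eight traces at 45 degree intervals hit alternately at 2 m (walls) and about 2.83 m (corners), and the estimate comes out at 48 cubic metres.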

Each physical surface material type maps to an absorption coefficient, which essentially dictates how much sound energy is absorbed by the surface. The system averages the absorption coefficients of all surfaces hit during the first batch of line traces, then uses this average in conjunction with the volume of the space to calculate the reverberation time using Sabine’s equation. The result is used to set the reverb time parameter on the reverb submix effect. The high frequency decay and high frequency gain parameters are also scaled according to the average absorption coefficient, which makes more reflective surfaces sound brighter.
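In its metric form, Sabine’s equation is RT60 = 0.161 × V / (S × ā), where V is the room volume, S the total surface area and ā the average absorption coefficient. The sketch below shows the calculation. The surface area argument is an assumption here, since Sabine’s equation needs it even though only volume and the average coefficient are discussed above; the function name is hypothetical.

```python
SABINE_CONSTANT = 0.161  # seconds per metre, metric form of Sabine's equation

def sabine_rt60(volume_m3, surface_area_m2, absorption_coefficients):
    """Return the RT60 in seconds from volume, surface area and hit coefficients."""
    # Average the coefficients of every surface the traces collided with.
    mean_alpha = sum(absorption_coefficients) / len(absorption_coefficients)
    total_absorption = surface_area_m2 * mean_alpha
    if total_absorption <= 0.0:
        # Perfectly reflective surfaces would ring forever.
        return float("inf")
    return SABINE_CONSTANT * volume_m3 / total_absorption
```

As a worked example, a 5 m by 4 m by 3 m room has a volume of 60 cubic metres and a surface area of 94 square metres. With an average coefficient of 0.1 the reverberation time is roughly 1.03 seconds.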

Early Reflections

Early reflections are calculated by taking the initial batch of line traces and ‘bouncing’ them around the environment. When a line trace overlaps a sound emitter, a valid path of reflection is established. The relevant delay time is then calculated and stored in the emitter. These delay times are then used to set the multi-tap delay effects. Panning is preserved by using the initial line trace angle, meaning that the system also adapts to player rotation.
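The delay for a reflection path follows from its length and the speed of sound. The sketch below makes several assumptions that are not stated above: positions are in metres, the speed of sound is 343 m/s, and the delay is measured relative to the direct path so that it can be applied on top of the undelayed dry signal. The function names are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # metres per second, air at roughly 20 degrees C

def path_length(points):
    """Total length of a polyline through the given 3D points."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))

def reflection_delay_ms(listener, bounce_points, emitter):
    """Extra travel time of a reflected path over the direct path, in ms."""
    reflected = path_length([listener, *bounce_points, emitter])
    direct = math.dist(listener, emitter)
    return (reflected - direct) / SPEED_OF_SOUND * 1000.0
```

For example, with the listener at the origin, an emitter 4 m away and a single bounce off a wall 1.5 m to the side of the midpoint, the reflected path is 5 m long, so the tap delay is about 2.9 ms.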

Using The System

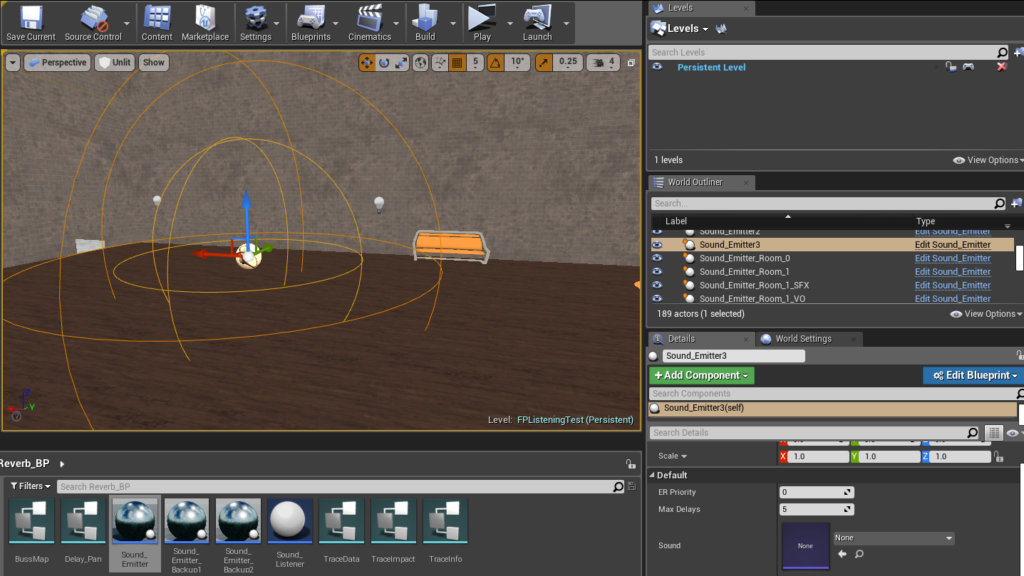

Using the system is fairly straightforward, as it essentially functions using only two blueprint classes. The Sound_Emitter BP class is used for all sound sources, and the Sound_Listener BP class should be added to the level once. The Sound_Listener will automatically track the player’s location and emit line traces. The Sound_Emitter should be used in place of the AmbientSound actor. It contains an audio component for playing back sounds, a collision sphere for detecting line traces, and is also used to store and set all parameters relating to the early reflections. Upon adding a Sound_Emitter to a level, the designer can easily set the sound to be played using the details panel. Attenuation settings can then be set in the usual manner within the sound cue.

To function correctly, the system requires a dedicated collision channel called ‘reverb’. All walls, floors and ceilings should be set to block this channel, the collision spheres on the sound emitters should be set to overlap it, and all other actors should be set to ignore it. This ensures that the reflection paths and room size estimation are not affected by props and other actors. Custom collision channels can be configured within the project settings.

The user must also configure the required physical surface types within the project settings, and also assign each of these an absorption coefficient within the Coefficients dictionary, which is contained in the Sound_Listener BP. These surface types can then be added to physical material types and assigned to the level geometry in the usual manner.

The system will also function alongside the traditional method of using fixed audio volumes, and can be activated and deactivated globally by calling the events Activate Reverb System and Deactivate Reverb System in the Sound_Listener blueprint.

Reverb Priority

All emitters within audible range are sent to the late reflection submix; however, only four emitters can have early reflections simulated simultaneously. This is because each emitter needs its own dedicated multi-tap delay effect in order to adequately simulate the early reflections, and so the number of emitters that can use this system must be limited. Allocation is handled by a priority system whereby the designer can set an integer priority for each emitter in the details panel. When more than four emitters collide with the early reflection line traces, the four with the highest priority are used. This system is also dynamic, so an emitter that loses its priority slot fades itself out of the early reflection system without audible artefacts.
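The allocation step amounts to picking the four highest-priority emitters from those currently hit by the early reflection traces. The sketch below shows that selection in isolation; the names and the `(name, priority)` tuple layout are hypothetical, and how ties are broken is not covered here.

```python
import heapq

MAX_EARLY_REFLECTION_EMITTERS = 4  # one multi-tap delay per emitter

def select_early_reflection_emitters(candidates,
                                     limit=MAX_EARLY_REFLECTION_EMITTERS):
    """Return up to `limit` (name, priority) pairs, highest priority first."""
    return heapq.nlargest(limit, candidates, key=lambda c: c[1])

hit_emitters = [("fire", 3), ("radio", 5), ("drip", 1),
                ("npc", 9), ("fan", 2), ("door", 7)]
active = select_early_reflection_emitters(hit_emitters)
```

Here `active` contains the npc, door, radio and fire emitters in that order, while the drip and fan emitters would only feed the late reflection submix.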

Customisation

The overall reverb time can be scaled by setting the public variable RT60Scale in the Sound_Listener. This acts as a multiplier, so a value of 1 leaves the reverb time unchanged and 0.5 results in reverb times half as long. The number of times the line traces bounce around the environment can be set using the Max Order Of Reflection public variable on the Sound_Listener. Higher numbers of bounces will identify more valid reflection paths, but at the expense of additional CPU time, so this can be tweaked to find an optimal compromise based on the target hardware. The balance of early reflections can be set using the ERTrim Global DB public variable in the Sound_Listener. This allows early reflections to be boosted or attenuated globally in decibels. A value of 0 leaves the balance unchanged, every +6dB roughly doubles the level and every -6dB roughly halves it.
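The decibel trim maps to a linear amplitude gain through the standard conversion, gain = 10^(dB / 20). The sketch below shows that conversion alongside the RT60 multiplier; the function names are hypothetical and chosen only to mirror the variables described above.

```python
def db_to_linear(gain_db):
    """Convert a decibel trim to a linear amplitude multiplier."""
    return 10.0 ** (gain_db / 20.0)

def scaled_rt60(rt60_seconds, rt60_scale):
    """Apply the RT60Scale multiplier to a calculated reverb time."""
    return rt60_seconds * rt60_scale
```

A trim of +6dB gives a multiplier of about 1.995 and -6dB gives about 0.501, which is why 6dB steps are treated as doubling and halving.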

Future Developments/Improvements

Currently the system updates at a fixed interval of 0.5 seconds. Whilst this seems adequate, it would be good to let the designer set a custom update rate to allow for better optimisation. Similarly, there are probably optimisations that can be made in terms of the number and distribution of line traces. I plan on allowing the designer to set the number of line traces using a single variable, then have the system calculate the relevant trace angles automatically for an even distribution. It would also be beneficial to expose the variables for the reverb scaling over distance, as this would give the designer finer control over the overall reverb mix. Scaling according to a customisable curve, as opposed to purely linear scaling, would allow an even greater level of control. Making the system aware of the type of sound the emitter is playing could be beneficial as well, as it would allow content such as dialogue to have a lower mix of early reflections to improve intelligibility. One key benefit of building the entire system within Unreal Engine 4 is that anyone with knowledge of Blueprints could conceivably modify the system to suit their needs, making it almost infinitely expandable and customisable. Many of the planned improvements simply involve making certain parameters more accessible, intuitive or user friendly to aid in this process.
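One well-known way to get an even spread of directions from a single trace count is a Fibonacci sphere. The sketch below illustrates that general technique as a possible starting point; it is not something the current system does, and the function name is hypothetical.

```python
import math

def fibonacci_sphere_directions(count):
    """Return `count` roughly evenly spread unit vectors as (x, y, z) tuples."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    directions = []
    for i in range(count):
        # Step z evenly from just below +1 down to just above -1.
        z = 1.0 - 2.0 * (i + 0.5) / count
        radius = math.sqrt(1.0 - z * z)
        # Rotate around the vertical axis by the golden angle each step.
        theta = golden_angle * i
        directions.append((radius * math.cos(theta),
                           radius * math.sin(theta),
                           z))
    return directions

trace_directions = fibonacci_sphere_directions(64)
```

Each returned vector could then be multiplied by the maximum trace length to give the end point of a line trace starting at the listener.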