Game Sound Vs. Film Sound

There are a number of important distinctions to be made between sound design for linear media such as film, and sound design for interactive media. The first and possibly most important distinction is the fact that in film, a given sound effect can be considered a single, discrete event that occurs at an exact moment in time. No matter how many times the film is replayed, the sound will always play out in the same way. In interactive media, control of the same event may be passed on to the player, and as such the event may be repeated many times in a number of different contexts. This creates the potential for highly repetitious sound design, which in many cases is undesirable for reasons that shall be discussed shortly.

The Need For Procedural Sound Design

At this point it is important to draw a distinction between non-diegetic ludic sounds that are designed to notify the player about the state of the game, and diegetic sounds that arise from player interactions with the environment. Take for example the sound played in Metal Gear Solid (Konami 1998) when the player is spotted by an NPC guard (see video below).

This short fanfare is heard by the player, but is not heard by the player’s character or any of the NPCs, and as such is considered non-diegetic. Its sole function is to notify the player that they have been spotted and must take evasive action to avoid being killed, making it a ludic notification sound. This sound is required to be repetitive and consistent every time it is played in order to clearly communicate this message to the player, making variation undesirable. Now consider the sound that is played when the player fires a gun in Battlefield 1 (Electronic Arts 2016). This sound exists within the game world, can be heard by the player character, and most importantly is created as the result of a simulated physical process.

In the real world, no physical process will ever produce exactly the same sound each time it is repeated. As Stevens and Raybould (2016 p. 59) observe: “Even a repeated mechanical action is subject to slight changes because the materials have changed since the last action (through change in temperature or even the loss of a few atoms!), and of course the sound is travelling through air and reflecting around an environment, both of which add their own variations to the sound.” Clearly if a sound designer is going to simulate the real-life behaviour of a weapon firing with any degree of accuracy, then sonic variation will be required.

Creating Variation

One approach to creating variation in weapon sound effects could be to simply create a large number of separate gunshot audio files, each one slightly different to the last. This is not an ideal solution, however. Each audio file must either be loaded into limited RAM or read directly from the hard drive, so the greater the number of audio files required, the greater the demands placed on either memory or hard drive bandwidth (Stevens and Raybould 2016 p. 60).

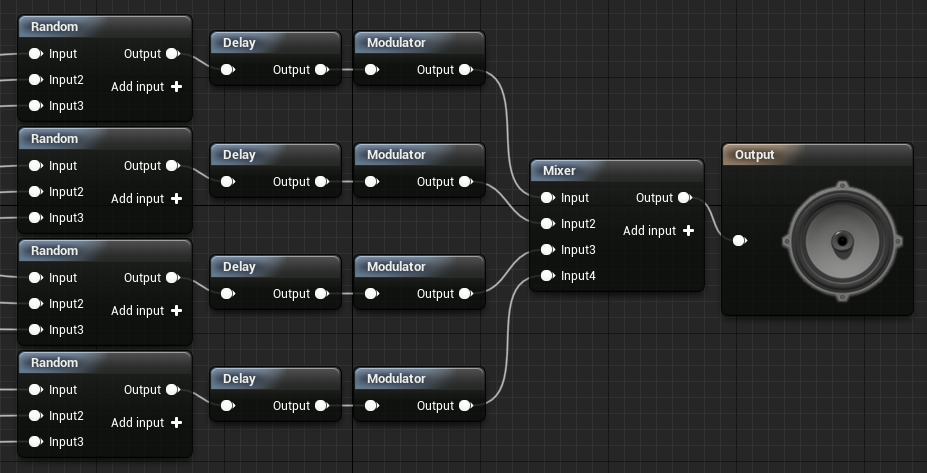

Procedural sound design overcomes this issue by allowing for the creation of a vast number of variants from a relatively small number of initial assets. If the sound designer were to load the constituent elements of the sound event into the game engine and then randomly combine them at runtime then they would be able to leverage the principles of combination and permutation in order to create variation. For example, to create variation in gunshots the sound designer could create four short samples for the initial gunshot transient, four short metallic sounds to emulate the sound of the gun’s slide action, four short samples of the gunshot decaying, and four samples of the shell casing hitting the floor. Any of the transient samples could then be combined at runtime with any of the slide action samples, followed by any of the decay and shell casing samples. In order to calculate the number of potential combinations this creates, the following formula can be used:

c = s1 * s2 * s3 * s4

Where c is the number of combinations and s1 to s4 are the numbers of each type of sound asset. Substituting the figures from the above example gives 4 * 4 * 4 * 4 = 256 variations from only sixteen short assets. Considering the amount of time it would take to individually create this number of variations, it can be seen that procedural sound design has benefits not only for reducing memory usage, but also for optimising workflow.
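The calculation and the runtime selection described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the file names, the `LAYERS` dictionary and both function names are hypothetical, and a game engine would normally handle this inside its own sound cue system rather than in script.

```python
import random
from math import prod

# Hypothetical asset names: four variants for each of the four layers
# described in the gunshot example.
LAYERS = {
    "transient": [f"transient_{i}.wav" for i in range(1, 5)],
    "slide": [f"slide_{i}.wav" for i in range(1, 5)],
    "decay": [f"decay_{i}.wav" for i in range(1, 5)],
    "shell": [f"shell_{i}.wav" for i in range(1, 5)],
}


def count_combinations(layers):
    """Return c = s1 * s2 * ... for however many layers are supplied."""
    return prod(len(variants) for variants in layers.values())


def pick_gunshot(layers, rng=random):
    """Choose one variant per layer at random, as would happen at runtime."""
    return {name: rng.choice(variants) for name, variants in layers.items()}


if __name__ == "__main__":
    print(count_combinations(LAYERS))  # 4 * 4 * 4 * 4
    print(pick_gunshot(LAYERS))
```

Adding a fifth variant to every layer would raise the count to 5 * 5 * 5 * 5 = 625 while adding only four new assets, which is why the approach scales so well.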

There is one other factor to take into consideration here, and that is the fact that combining sounds at runtime shifts the computational burden from the RAM and places it instead on the CPU. However, Somberg (2016, 2:19) states that the computational load placed on the CPU when summing digital audio is very low. This is because summing digital signals simply requires that each digital sample (here the term “sample” refers to digital sampling theory rather than short clips of audio) be added to any other digital sample that occurs at the same moment in time. Clearly the trade-off of utilising less memory for a marginal increase in CPU load is a favourable one.
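To show why summing is cheap, the following minimal sketch mixes signals by adding them sample by sample. It assumes that each signal is a plain list of floating-point samples at the same sample rate, normalised to the range -1.0 to 1.0; the `mix` and `clamp` names are hypothetical, and a real engine would do this work in optimised native code.

```python
def mix(*signals):
    """Sum signals sample by sample; shorter signals are treated as
    silence once they have finished."""
    length = max((len(signal) for signal in signals), default=0)
    mixed = [0.0] * length
    for signal in signals:
        for i, value in enumerate(signal):
            mixed[i] += value  # one addition per sample per source
    return mixed


def clamp(signal, limit=1.0):
    """Hard-limit the summed signal so it stays within [-limit, limit]."""
    return [max(-limit, min(limit, value)) for value in signal]


if __name__ == "__main__":
    transient = [0.5, 0.25]
    decay = [0.25, 0.25, 0.5]
    print(clamp(mix(transient, decay)))
```

The cost is a single addition per sample for each source being mixed, so it grows only linearly with the number of layers.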

Ambience and Looping Sounds

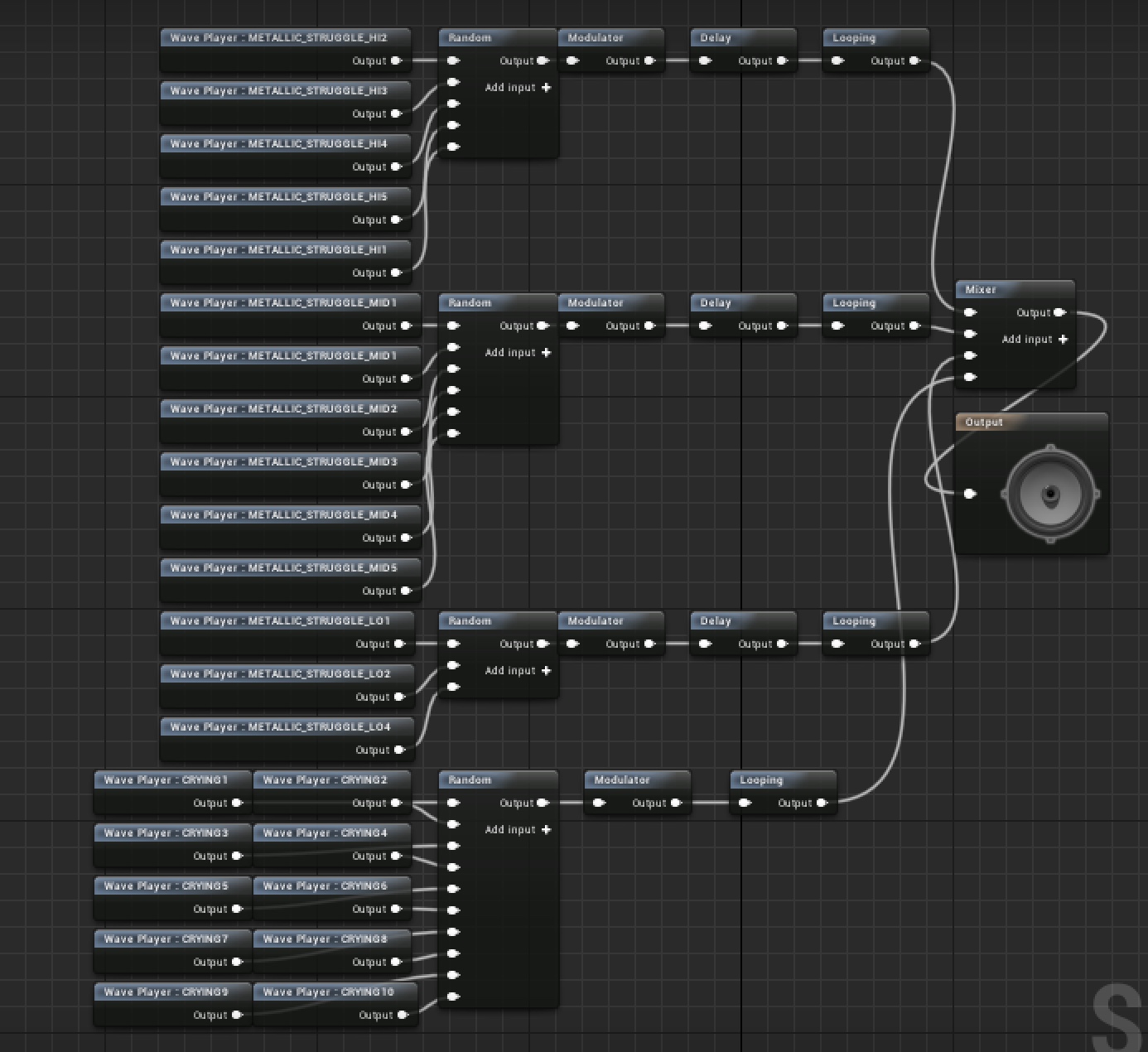

Procedural sound design can also be used to create looping environmental sounds whilst avoiding large file sizes and noticeable repetition, even when the sound to be replicated is repetitive in nature. In the following example procedural sound design has been used to create the effect of a crying baby trapped in a small space for a horror game.

A recording of a baby crying was split into individual cries and each cry was then processed with reverb to give the impression that it was emanating from a confined space. These cries were then randomised without repetition within Unreal Engine. Randomisation without repetition ensures that once a sound has been played, it will not be played again until every other sound has been used, and avoids the same sound being played twice in a row. The randomised sequence of cries was then routed to a modulator to provide subtle pitch and level variation, and then to a looper to ensure a constant stream of randomised cries.

This was then combined with three types of sound designed to suggest movement within the container, which were created by holding a microphone against the side of a metal computer enclosure and tapping the enclosure gently. Each of these metallic sounds was categorised into a low, mid or high pitched group, and the sounds were similarly randomised and modulated within these groups. A variable delay was used on each group to ensure that the metallic movement sounds were replayed sporadically, with a random delay value being selected within upper and lower limits. The higher pitched sounds were set to repeat more frequently and the lower pitched sounds less frequently in order to create the illusion of movement.

Finally the crying and each of the three metallic sound categories were summed together using a mixer node, and their levels balanced appropriately. The resulting sound cue can be seen below:
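The behaviour described above can be approximated outside the engine with a short Python sketch. It is not Unreal Engine's implementation: the `ShuffleBag` class, the `schedule_events` function, the file names and the pitch and gain ranges are all hypothetical choices made for illustration, and the sounds in each bag are assumed to be distinct.

```python
import random


class ShuffleBag:
    """Randomise without repetition: every item plays once before any
    item plays again, and no item plays twice in a row."""

    def __init__(self, items, rng=None):
        if len(items) < 2:
            raise ValueError("need at least two distinct sounds")
        self._items = list(items)
        self._rng = rng or random.Random()
        self._bag = []
        self._last = None

    def next(self):
        if not self._bag:
            # Refill and reshuffle once every sound has been used.
            self._bag = self._items[:]
            self._rng.shuffle(self._bag)
            # Items are taken from the end of the bag, so if the next one
            # would repeat the sound just played, swap it to the front.
            if self._bag[-1] == self._last:
                self._bag[0], self._bag[-1] = self._bag[-1], self._bag[0]
        self._last = self._bag.pop()
        return self._last


def schedule_events(bag, count, min_delay, max_delay, rng):
    """Build a timeline of sounds separated by random delays, each with a
    subtle random pitch and gain (assumed ranges, for illustration)."""
    events = []
    time = 0.0
    for _ in range(count):
        time += rng.uniform(min_delay, max_delay)
        events.append({
            "time": time,
            "sound": bag.next(),
            "pitch": rng.uniform(0.95, 1.05),
            "gain": rng.uniform(0.9, 1.0),
        })
    return events


if __name__ == "__main__":
    rng = random.Random()
    high_taps = ShuffleBag(["tap_high_1.wav", "tap_high_2.wav", "tap_high_3.wav"], rng)
    for event in schedule_events(high_taps, 5, 0.5, 2.0, rng):
        print(event)
```

One bag and one pair of delay limits would be created per group, with shorter limits for the high pitched group and longer limits for the low pitched group, to mirror the sporadic movement described above.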

A sample of the resulting sound cue can be heard here:

Creating this sound from a single looping audio file would require a very long loop, which would not only require a large amount of RAM but also risk becoming noticeably repetitious if the player were able to discern a pattern in the cries or the movement sounds. This could cause a break in immersion and compromise the player’s experience, which would be especially undesirable in a horror game.

This article has only begun to scratch the surface of the possibilities that procedural sound design creates for sound designers. Hopefully it has illustrated how valuable these techniques can be, especially as the scope of player interactions increases within games and the need for convincing audio assets scales accordingly.

REFERENCES

Somberg, G. (2016) Lessons Learned from a Decade of Audio Programming – Presented at GDC 2014 [Online Resource] Available at https://youtu.be/Vjm–AqG04Y [Accessed 13th Feb 2018].

Stevens, R. and Raybould, D. (2016) Game Audio Implementation: A Practical Guide Using The Unreal Engine. Boca Raton, FL: CRC Press.

Games

Battlefield 1 (2016) Electronic Arts

Metal Gear Solid (1998) Konami